Finding meaningful insights in end-user feedback has long been a white whale chase for IT pros. What truths lie beneath the murky depths of user opinion, and how can IT track them down faster? More importantly, is doing so even worth IT’s time? This post answers those questions and offers strategies for using the input of an increasingly tech-literate workforce to improve end-user experience and boost employee productivity.

The case for qualitative end-user feedback

It’s no secret that users have a lot to say about their technology. Many IT leaders see this as an opportunity to learn how business decisions about technology are affecting employees.

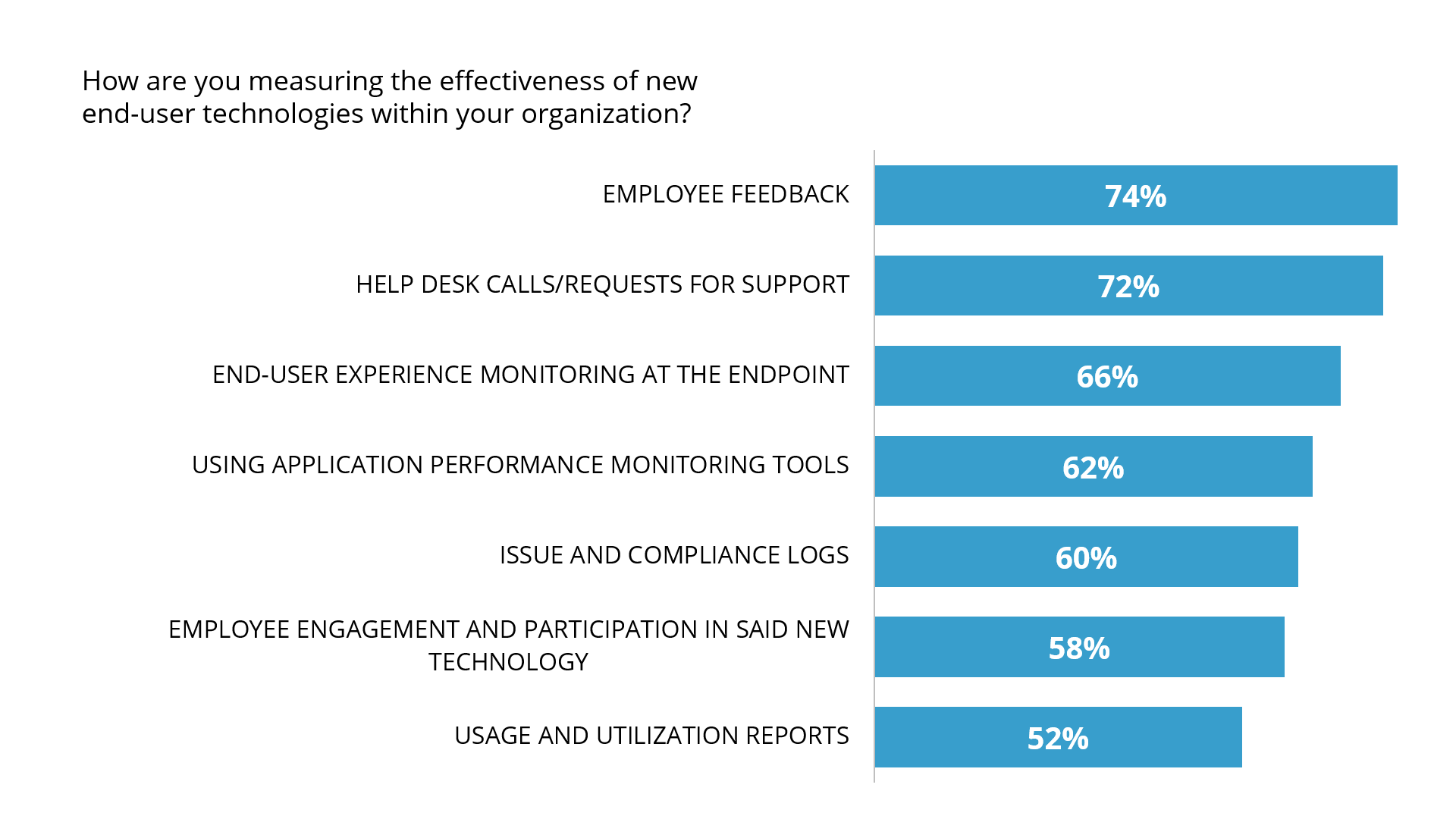

In a recent survey conducted through IDG Research, we asked IT decision makers which methods they use to measure the effectiveness of new end-user technologies at their organizations. All respondents reported using one or more sources, and 74% of IT leaders identified employee feedback as a method they use to judge the success of newly implemented technology.


It makes sense that employee feedback is such a popular way for IT to understand the end-user environment. Firstly, the motivations for asking end users how they perceive workplace technologies are simple:

- It’s cheap (the only cost being the labor involved)

- It provides an outlet for users to express their concerns and frustrations

- It can hold valuable truths: Users are the consumers of technology and, in theory, should be the most attuned to how it affects their productivity

Secondly, users are generally responsive and more than willing to discuss their feelings about technology. However, relying on feedback as an indicator is tricky because humans can never be fully objective, even when judging machines (we all remember that scene from Office Space). On top of that, even the most honest responses may lack the technical details IT needs to diagnose and solve user issues.

The primary filter for most employee feedback on technology is the IT help desk. Users call in or file tickets to report any number of problems they may be experiencing, whether it’s a forgotten password, a slow system, or a more complex performance issue. Our survey found that 72% of IT leaders use these interactions to measure the effectiveness of new technologies.

But the help desk should not be the sole indicator of the success of digital initiatives. Help desk feedback provides a narrow and heavily skewed picture of the environment. For example, a user who calls the help desk constantly may consume a disproportionate share of help desk resources, while users with objectively worse experiences who rarely call go unnoticed. Decisions based on feedback from the loudest voices are unlikely to produce solutions that benefit the average user.

That’s not to imply that users have nothing valuable to say. On the contrary, qualitative data is easy to obtain, and user sentiment can be as valuable as analytical insights. The key is to seek more representative feedback and tailor it to IT initiatives, which can be achieved through end-user feedback surveys that are appropriately presented and analyzed.

Incorporating surveys into your IT strategy

In a B2C context, surveys are widely used to assess consumer opinions on products or services. In my experience, this trend is growing: even the farm I buy a produce share from includes a star-rating system in its emails. Across industries, businesses recognize the value of gathering sentiment data directly from consumers.

But the relationship between IT and employees differs slightly from the one between businesses and customers. For one (excluding cases of outsourced tech support), IT and employees are batting for the same team. Both groups want the business to succeed and see technology as integral to that goal, but their top priorities can differ: users tend to value mobility and ease of access, while IT must factor in security and cost control. Despite these differences, both groups want technology to perform well and users to be satisfied. How can they work together to make that happen?

Just as in the consumer sector, IT teams can use surveys to connect with users and learn about their experiences. One advantage IT has over B2C survey makers is that it doesn’t need to wait for a purchase or a service interaction to initiate contact. Because IT is a continuous service, teams can use surveys creatively to get ahead of issues and fix them proactively. Surveys can also be tailored to return information aligned with a specific IT objective, such as judging the impact of desktop transformation.

However, like help desk calls, surveys alone aren’t enough to meaningfully inform IT strategy. They still present issues of bias, returning users’ perceptions rather than data-driven accounts of what is happening on their systems. How can IT avoid this pitfall? By examining end-user feedback alongside real, user-level endpoint performance and usage data.

Value of combining qualitative and quantitative data

On their own, qualitative and quantitative data each tell only one side of the story: the employee’s or the computer’s. Quantitative analytics can reveal a wide range of performance details about a system, and such data can even be rolled up into a score, based on key metrics, that tracks the state of a user’s experience over time. But we know from conversations with our customers that the data on a user’s system sometimes looks great while the user still reports a poor experience.
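
To make the idea of a rolled-up score concrete, here is a minimal Python sketch. It assumes a hypothetical scheme, not SysTrack’s actual scoring, in which each metric subtracts a weighted penalty proportional to the fraction of sampled time it was impacted. The metric names and weights are illustrative only.

```python
# Hypothetical metrics and weights (they sum to 100, so the score stays in 0-100).
WEIGHTS = {
    "cpu_saturation": 30.0,
    "memory_pressure": 25.0,
    "network_latency": 25.0,
    "app_faults": 20.0,
}


def experience_score(impact_fractions, weights=WEIGHTS):
    """Roll per-metric impact fractions (0.0-1.0) into a 0-100 score; higher is better."""
    penalty = 0.0
    for metric, weight in weights.items():
        # Missing metrics count as no impact; out-of-range values are clamped to [0, 1].
        fraction = min(max(impact_fractions.get(metric, 0.0), 0.0), 1.0)
        penalty += weight * fraction
    return round(100.0 - penalty, 1)


# CPU saturated 10% of the time, network latency impacted 40% of the time.
print(experience_score({"cpu_saturation": 0.1, "network_latency": 0.4}))  # 87.0
```

Computing such a score per user at regular intervals yields the kind of trend line described above.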


By observing qualitative survey data alongside quantitative analytics, IT can identify problems that would be difficult, if not impossible, to discover otherwise. Consider a user experiencing a low-level performance issue, such as moderate latency, that takes a large toll on his or her productivity over time, particularly if it affects a business-critical application. Such a problem may not degrade the overall performance of the user’s system enough for IT to notice. By contributing qualitative feedback, the user can get help with a problem that might otherwise fly below IT’s radar.
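
A simple filter can surface the kind of mismatch described above. The sketch assumes hypothetical per-user records holding a 0-100 experience score and a 1-5 survey rating. The field names and thresholds are illustrative and not part of any product.

```python
from dataclasses import dataclass


@dataclass
class UserRecord:
    user: str
    experience_score: float  # hypothetical 0-100 score rolled up from endpoint metrics
    survey_rating: int       # hypothetical 1-5 self-reported satisfaction


def hidden_issues(records, score_ok=80.0, rating_poor=2):
    """Return users whose metrics look healthy but who report a poor experience."""
    return [
        r.user
        for r in records
        if r.experience_score >= score_ok and r.survey_rating <= rating_poor
    ]


records = [
    UserRecord("alice", 92.0, 2),  # metrics look fine, user unhappy: flagged
    UserRecord("bob", 55.0, 1),    # metrics already show the problem
    UserRecord("carol", 88.0, 5),  # metrics look fine, user happy
]
print(hidden_issues(records))  # ['alice']
```

Users like "bob" are already visible in the performance data; the value of combining sources lies in catching users like "alice".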

Another major benefit of this approach is that it lets IT meaningfully improve service quality by looking for trends in both user sentiment and empirical performance data. An important application is the one we asked IT leaders about: analyzing the effectiveness of new end-user technologies. After deploying a new technology, IT can push surveys to users to gather qualitative feedback, then compare the responses with performance data on the technology to see whether adjustments are needed, such as training users or changing which users get the new technology. This is particularly useful where user buy-in matters, for example when adopting new software purchased to improve business processes.
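
Here is a minimal sketch of that comparison. It assumes hypothetical post-deployment data that pairs each respondent's survey rating with the new application's latency, grouped by cohort. The thresholds and the decision rule are illustrative assumptions: recommend training when performance is fine but sentiment is poor, and revisit the rollout when performance itself is poor.

```python
from statistics import mean

# Hypothetical post-deployment data: (cohort, survey rating 1-5, app latency in ms)
responses = [
    ("finance", 2, 120), ("finance", 3, 110),
    ("sales", 2, 900), ("sales", 1, 1100),
    ("engineering", 5, 100), ("engineering", 4, 130),
]


def recommend(responses, rating_ok=3.5, latency_ok_ms=500):
    """Suggest a follow-up action per cohort from average sentiment and performance."""
    by_cohort = {}
    for cohort, rating, latency in responses:
        by_cohort.setdefault(cohort, []).append((rating, latency))

    actions = {}
    for cohort, rows in by_cohort.items():
        avg_rating = mean(r for r, _ in rows)
        avg_latency = mean(lat for _, lat in rows)
        if avg_rating >= rating_ok:
            actions[cohort] = "no change"
        elif avg_latency <= latency_ok_ms:
            # The technology performs well, yet users are unhappy: likely an enablement gap.
            actions[cohort] = "training"
        else:
            # Poor performance likely explains the poor sentiment.
            actions[cohort] = "revisit rollout"
    return actions


print(recommend(responses))
# {'finance': 'training', 'sales': 'revisit rollout', 'engineering': 'no change'}
```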

Introducing SysTrack’s award-winning survey functionality

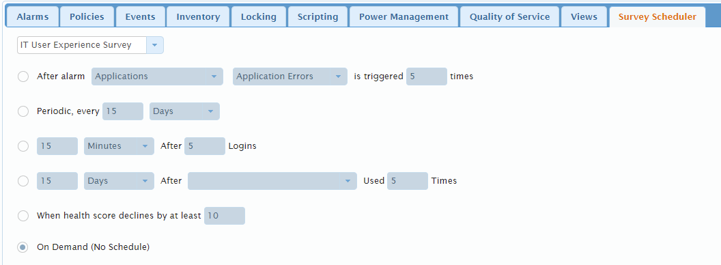

Lakeside has created a tool for gathering end-user feedback that lets IT design and schedule surveys easily within the SysTrack interface. IT can choose from sample surveys or design a custom one for virtually any use case. Thanks to SysTrack’s robust data-collection engine, survey responses can be compared with detailed endpoint metrics to speed up issue discovery and time-to-resolution. Surveys can be offered to users on demand or scheduled as part of IT operations. They can even be triggered by specified events, such as when a user’s experience score drops below a certain percentage or when a system alarm fires.
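
An event-triggered survey comes down to a rule evaluated against incoming endpoint data. The sketch below shows a hypothetical rule, not SysTrack’s configuration format. It fires when the experience score crosses below a threshold or when a watched alarm fires. The alarm names and the 70-point threshold are assumptions.

```python
# Hypothetical alarm names that should prompt a survey.
WATCHED_ALARMS = frozenset({"app_hang", "high_latency"})


def should_survey(prev_score, curr_score, fired_alarms, threshold=70.0, watched=WATCHED_ALARMS):
    """Return True if a survey should be pushed to the user.

    Fires on a downward crossing of the threshold (previous reading at or above it,
    current reading below it) or when any watched alarm has fired.
    """
    crossed_below = prev_score >= threshold > curr_score
    return crossed_below or bool(set(fired_alarms) & watched)


print(should_survey(82, 65, []))            # True: score dropped below 70
print(should_survey(65, 60, []))            # False: already below, no new crossing
print(should_survey(90, 88, ["app_hang"]))  # True: watched alarm fired
```

Triggering on the crossing rather than on every low reading avoids repeatedly surveying a user whose score stays low.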

At VMworld US 2017, the TechTarget judges named this functionality the winner in the End-User Computing, Mobility and Desktop Virtualization category, stating, “Surveying users for opinions of their experience, then correlating that with empirical performance data gathered by their agent allows IT to fine-tune resource allocation and better understand end users’ needs.”

SysTrack surveys in action

An IT admin starts by deciding what they want to learn about their environment. When the subject is identified (in this case, IT user experience), the admin can either choose one of the pre-designed surveys or generate a new one.

Once the survey is made, the admin can choose which users to send it to, when to send it, and under what conditions.

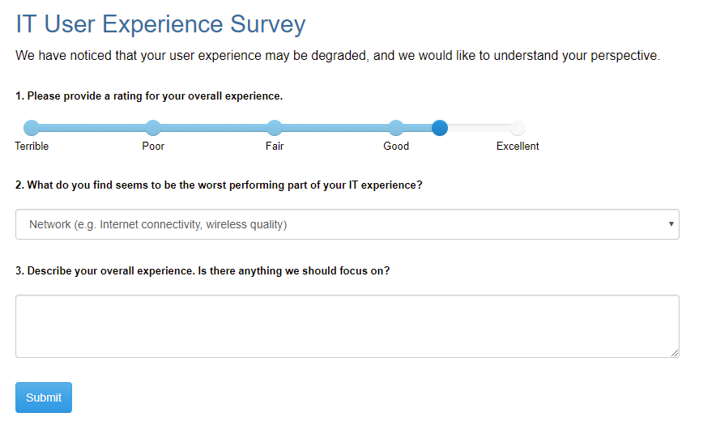

The survey appears as a pop-up on the end user’s screen with an intuitive interface for quickly answering IT’s questions.

User responses can be integrated with dashboards to quickly and easily compare end-user feedback with system performance data.

