What Does ‘End-to-End’ Monitoring Really Mean?

Gain real visibility across environments for better insights into digital employee experience

The old saying goes that if all you have is a hammer, every problem looks like a nail. And this is certainly true in the IT world.

Many vendors and technologies claim to provide “end-to-end” monitoring of systems, applications, users, digital employee experience (DEX), and so forth as part of a better, more comprehensive digital experience management solution. When one starts to peel back the proverbial onion on this topic, though, it becomes clear that these technologies only provide “end-to-end” visibility if you’re really flexible with the definition of the word “end.”

Let’s elaborate.

A Closer Look at IT Visibility

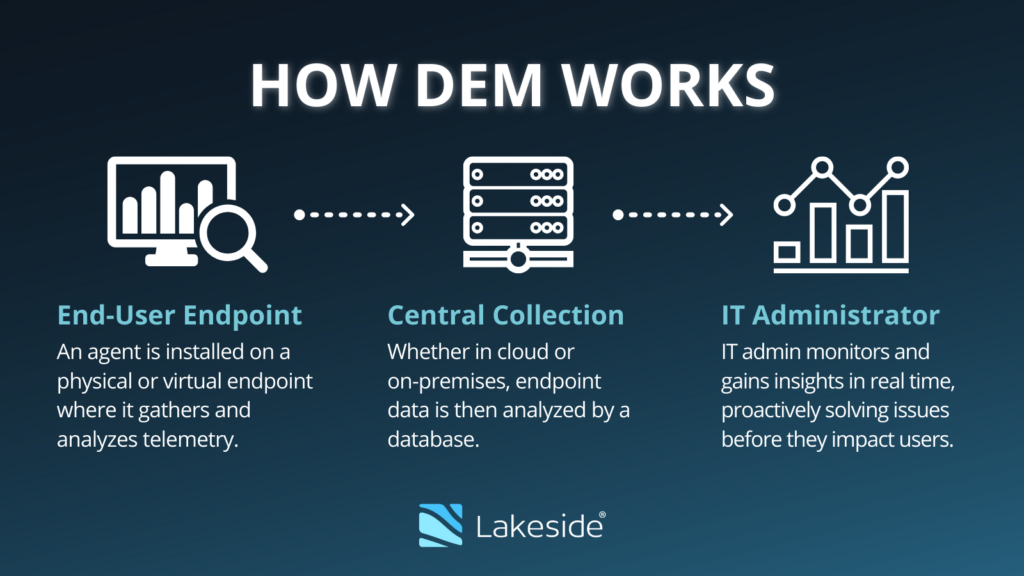

End-to-end monitoring, also known as digital experience monitoring, is the process of analyzing data across infrastructure, devices, applications, and services for a holistic view of digital environments and how end users’ interactions with technology affect the quality of their digital experiences. End-to-end monitoring is a vital component of digital experience management (DEM).

If I am interested in the digital employee experience, also known as end-user experience (EUX), of a given system or IT service, I would certainly start with what impacts the end user:

- Is the system responsive to inputs?

- Are the systems free of crashes or annoying application hangs?

- Do the systems function for office locations as well as for remote access scenarios?

- Do complex tasks and processes complete in a reasonable amount of time?

- Is the end-user experience consistent?

These are the questions that business organizations as a whole often care about.

In the world of IT, however, the topic of end-user experience is often discussed in rather technical terms. On top of that, there’s no such thing as a single point of contact for all the systems within a larger IT structure. For example, there is a network team (maybe even split among local-area networks, wide-area networks, and wireless technologies), a server virtualization team, a storage team, a PC support team, and various application teams, plus many other silos.

So the monitoring tools available in the market basically map onto these silos as well. Broadly speaking, there are tools that are really good at network monitoring, which means they look at the network infrastructure (routers, switches, and so forth) as well as the packets flowing through it. Because network traffic is layered along the lines of the seven-layer OSI model, these tools can report not only on connections, TCP ports, IP addresses, and network latency, but can also look into the payload of the packets themselves. The latter means being able to tell whether a network connection is carrying HTTP for web browsing, PCoIP or ICA/HDX for application and desktop virtualization, SQL database queries, etc.

Because that type of protocol information sits in the top layer of the model, also called the application layer, vendors often position this type of monitoring as “application monitoring,” although it really has little to do with looking into the applications and their behavior on the system. Despite this application-layer detail in the networking stack, the data is not nearly sufficient to figure out the end-user experience. We may be able to see that a web server takes longer than expected to return a requested object of a web page, but we have no idea why that might be so. This is because network monitoring only sees packets as they leave one system, arrive at another, and trigger a corresponding response going the other way. It watches the back and forth, but has no idea what is happening inside the systems that are communicating with each other.
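To make that limitation concrete, here is a minimal sketch of what a packet-level view can compute. The `Packet` record, its fields, and the sample capture are hypothetical and invented for illustration; real capture tools expose far richer data. The point is that the output is a set of response times and nothing more.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    # Hypothetical, simplified record of one observed packet.
    timestamp: float  # seconds since the capture started
    src: str
    dst: str
    kind: str  # "request" or "response"


def response_times(packets, client, server):
    """Pair each client request with the next server response.

    Returns the elapsed time in seconds for each pair.
    """
    gaps = []
    pending = None
    for p in sorted(packets, key=lambda p: p.timestamp):
        if p.kind == "request" and p.src == client and p.dst == server:
            pending = p.timestamp
        elif (p.kind == "response" and p.src == server
              and p.dst == client and pending is not None):
            gaps.append(round(p.timestamp - pending, 3))
            pending = None
    return gaps


# Made-up capture: one fast exchange and one slow one.
capture = [
    Packet(0.000, "10.0.0.5", "10.0.0.9", "request"),
    Packet(0.040, "10.0.0.9", "10.0.0.5", "response"),
    Packet(1.000, "10.0.0.5", "10.0.0.9", "request"),
    Packet(2.350, "10.0.0.9", "10.0.0.5", "response"),
]

print(response_times(capture, "10.0.0.5", "10.0.0.9"))
```

The second exchange is clearly slow, but nothing in the capture says whether the server was short on CPU, waiting on a database, or running inefficient code.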

The story repeats itself in other silos as well. The hypervisor teams are pretty good at determining that a specific virtual machine is consuming more than its “fair share” of resources on the physical server and is therefore forcing other workloads to wait for CPU cycles or memory allocation. The key is that they won’t know what activity in which workload is causing a spike in resources. The storage teams can get really detailed about the sizing and allocation of LUNs, the IOPS load on the storage system, and the request volumes, but they won’t know why the storage system is spiking at a given point in time.
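The “fair share” check on the hypervisor side can be sketched in a few lines. This assumes the simplest possible definition, an equal split of host CPU across virtual machines, and uses made-up VM names and usage figures; real hypervisors use shares, reservations, and limits instead.

```python
def over_fair_share(cpu_by_vm, host_capacity_pct=100.0):
    """Return the VMs that use more than an equal split of host CPU.

    The result maps each such VM to how far it exceeds its share,
    in percentage points of host CPU.
    """
    if not cpu_by_vm:
        return {}
    fair = host_capacity_pct / len(cpu_by_vm)
    return {
        vm: round(used - fair, 1)
        for vm, used in cpu_by_vm.items()
        if used > fair
    }


# Hypothetical snapshot: percent of host CPU used by each VM.
usage = {"vdi-01": 12.0, "vdi-02": 61.0, "vdi-03": 9.0, "vdi-04": 10.0}

print(over_fair_share(usage))
```

This identifies which virtual machine is the heavy consumer, but, as described above, not which user or application inside it is responsible.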

The desktop or PC support teams… oh, wait: many of them don’t have a monitoring system, so they are basically guessing and asking users to reboot the system, reset the Windows profile, or blame it on the network. Before I get a ton of hate mail on the subject, though, it’s really hard to provide end-user support because we don’t typically have the tools to see what the user is really doing (and users are notoriously bad at accurately describing the symptoms they are seeing).

Then there’s application monitoring, which is the art and science of determining a baseline and measuring specific transaction execution times on complex applications such as ERP systems or electronic medical records applications. This is very useful to see if a configuration change or systems upgrade has a systemic impact, but beyond the actual timing of events, there is little visibility into the root cause of things. (Is it the server, the efficiency of the code itself, the load on the database, etc.?)
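A baseline comparison of this kind might look like the following sketch. The three-standard-deviation threshold and the sample timings are assumptions chosen for illustration, not a recommendation from any particular product.

```python
from statistics import mean, stdev


def is_regression(baseline_secs, new_secs, threshold=3.0):
    """Flag a transaction time that is far above the baseline.

    "Far" here means more than `threshold` sample standard
    deviations above the baseline mean.
    """
    mu = mean(baseline_secs)
    sigma = stdev(baseline_secs)
    return new_secs > mu + threshold * sigma


# Hypothetical timings, in seconds, for one transaction before an upgrade.
baseline = [2.0, 2.1, 1.9, 2.0, 2.2, 1.8]

print(is_regression(baseline, 2.3))  # within normal variation
print(is_regression(baseline, 3.5))  # well outside the baseline
```

The check says that something changed, which is exactly the systemic signal described above. It says nothing about which component caused the change.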

Why End-to-End Monitoring Is Essential

What all this leads to is that users may experience performance degradation that impacts their quality of work (or worse, their ability to do any meaningful work), while each silo looks at its own dashboards and monitoring tools just to raise its hand and shout “It’s not me!” That is hardly end-to-end; it is just a justification to carry on and leave the users to fend for themselves.

Most well-run IT organizations actually have a pretty good handle on their operational areas and can quickly spot and remediate any infrastructure problems. However, the vast majority of challenges that impact users directly but don’t lead to a flat-out system outage have to do with competing for resources. This is especially true in the age of server-based computing and VDI. One user runs a resource-intensive task, and all other users who happen to have their applications or desktops hosted on the same physical device suffer as a result. This is exacerbated by the desire to keep costs in check by sizing VDI and application hosting environments with very little room to spare for flare-ups in user demand.

This is exactly why it is so important to have a digital experience monitoring solution that has deep insights into the operating system of the server, virtual server, desktop, VDI image, PC, laptop, and other endpoints to help discern what is going on, which applications are running, crashing, misbehaving, consuming resources, etc. Only a comprehensive, holistic monitoring technology (combined with the infrastructure pieces mentioned above) can provide true end-to-end visibility into digital employee experience, which is essential for effective digital experience management. Because it’s one thing to notice that there is a problem or “slowness” on the network and it is something else entirely to be able to pinpoint the possible root causes, establish patterns, and then proactively alarm, alert, and remediate those issues.
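As a rough illustration of why operating system level data matters, the sketch below takes a time window in which users reported slowness and ranks the processes that consumed the most CPU on the shared host during that window. The sample format, user names, and process names are all hypothetical and do not reflect how any specific product stores its data.

```python
from collections import defaultdict


def top_consumers(samples, start, end, n=2):
    """Rank (user, process) pairs by total CPU sampled in a time window.

    Each sample is a tuple: (timestamp, user, process, cpu_pct).
    """
    totals = defaultdict(float)
    for ts, user, process, cpu_pct in samples:
        if start <= ts <= end:
            totals[(user, process)] += cpu_pct
    ranked = sorted(totals.items(), key=lambda kv: kv[1], reverse=True)
    return [key for key, _ in ranked[:n]]


# Made-up per-process samples from one shared host.
samples = [
    (100, "alice", "excel.exe", 5.0),
    (100, "bob", "video_encode.exe", 80.0),
    (105, "alice", "excel.exe", 6.0),
    (105, "bob", "video_encode.exe", 85.0),
    (200, "carol", "browser.exe", 40.0),
]

# Window in which slowness was reported.
print(top_consumers(samples, 100, 110))
```

With this kind of data, the “slowness” seen from the network or hypervisor side can be tied to a specific user and application rather than to a host or a virtual machine as a whole.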

How Lakeside Provides End-to-End Visibility

Speaking to IT organizations, system integrators, and customers over the years reveals one common theme: IT administrators would like to have ALL of the pertinent data available AND have it all presented in a single dashboard or view. Vendors are just responding to that desire by referring to their products as “end-to-end,” even though most of the monitoring aspects are not end-to-end at all, as explained above.

If you have the same requirement, have a look at Lakeside Software’s Digital Experience Cloud, powered by SysTrack. Ours is the leading tool to collect thousands of data points from PCs, desktops, laptops, servers, virtual servers, and virtual desktops, and it can seamlessly integrate with third-party sources to provide actionable data that can be viewed in one place.

We’re not networking experts in the packet analysis business, but we can tap into data sources from networking monitors and present that data along with user behavior and system performance. That is a powerful combination of granular data, and it provides truly end-to-end capabilities as a system of record and as part of a successful digital experience management platform.
